OpenAI announced a string of developments this month, one of which focuses on improving software security through artificial intelligence (AI). On October 30, the AI firm unveiled “Aardvark”, an AI agent that can detect security vulnerabilities in software programs.

OpenAI’s most advanced AI model, GPT-5, powers Aardvark. The service essentially lets developers get an AI-based analysis of their code to identify potential vulnerabilities. The tool tells developers how their code could be exploited and suggests workable fixes.

In an official statement, OpenAI described Aardvark as an autonomous agent that could fix security vulnerabilities at scale, much as a human security researcher would.

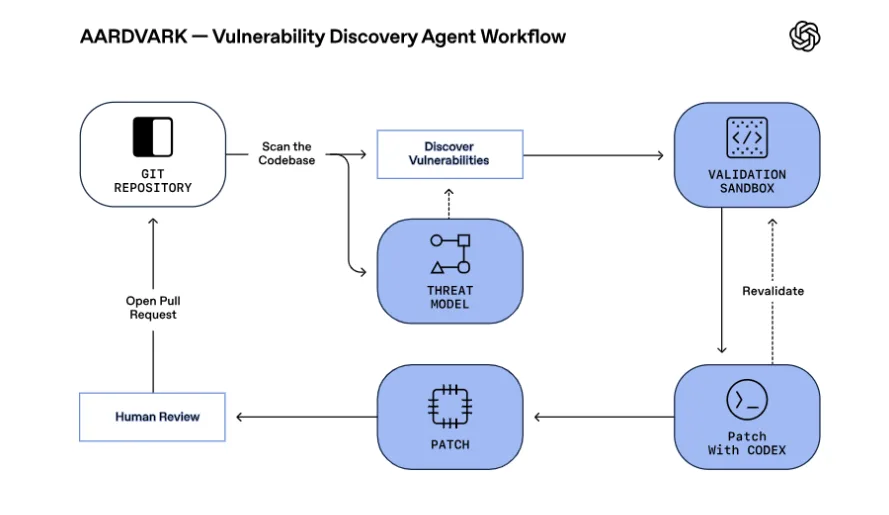

Explaining how it works, the Sam Altman-led AI firm posted a flow chart showing how the system analyses the codebase to build a threat model, discovers vulnerabilities, validates them, and then patches them.

Source: OpenAI

“Aardvark does not rely on traditional program analysis techniques like fuzzing or software composition analysis. Instead, it uses LLM-powered reasoning and tool-use to understand code behavior and identify vulnerabilities,” OpenAI said.

For now, the tool is available only in private beta. OpenAI has invited select partners to take part in the testing, and applications are open for developers who want to join the trials.

“In benchmark testing on ‘golden’ repositories, Aardvark identified 92 percent of known and synthetically-introduced vulnerabilities, demonstrating high recall and real-world effectiveness,” OpenAI added. “Our testing shows that around 1.2 percent of commits introduce bugs—small changes that can have outsized consequences.”
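For readers unfamiliar with the term, recall is the share of known vulnerabilities that a scanner manages to find. The sketch below shows the arithmetic in Python, using made-up numbers: the function name, the vulnerability IDs and the 25-item benchmark are all hypothetical and do not come from OpenAI's testing.

```python
def recall(found: set[str], known: set[str]) -> float:
    """Fraction of the known vulnerabilities that the scanner reported."""
    if not known:
        raise ValueError("known set must not be empty")
    return len(found & known) / len(known)


# Hypothetical benchmark: 25 seeded vulnerabilities, of which the scanner
# reports 23, plus one finding that is not a real vulnerability.
known = {f"VULN-{i}" for i in range(25)}
found = {f"VULN-{i}" for i in range(23)} | {"FALSE-ALARM-1"}

score = recall(found, known)
print(f"recall = {score:.0%}")  # 23 of 25 known issues were found
```

Recall on its own says nothing about false alarms: the extra finding in this example does not lower the score, so a high recall figure is normally read alongside a measure of how many reports turn out to be real.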

OpenAI is exploring new ways to integrate AI into day-to-day use cases as it eyes a $1 trillion valuation for an IPO slated for 2026. The company recently revised its deal with Microsoft, clearing the path for it to pivot towards a “for-profit” business model.